The Effortless Solution: Automating IOC Lookup Table Updates in Splunk

Are you tired of creating a separate lookup-generating saved search for every lookup table you need to populate in Splunk? If so, you’re not alone. In this article, I present a simple but powerful solution that will improve your workflow: a versatile approach that updates multiple lookups dynamically with a single Splunk query. Say goodbye to the burden of manual updates and hello to efficiency and simplicity.

The problem we aim to solve

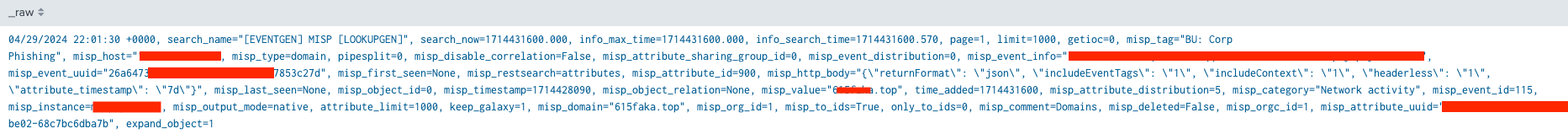

In our scenario, we are collecting various Indicators of Compromise (IOCs) from a MISP threat intelligence (TI) feed into Splunk. Because we fetch the data through an API, it arrives in Splunk as raw JSON, including some metadata we are not interested in.

To prepare the IOC data for use by the security teams (Threat Hunting, Security Operations, etc.) and consumption by dashboards, it’s important to format it in a more structured way and categorize it by IOC type.

Sample raw MISP IOC event as it is indexed in Splunk

Step 1: Create the lookup tables (KV Stores)

Step 1 involves creating the lookup tables where we are going to store the IOC data by category. For our use case, we will use KV stores because they allow us to perform CRUD operations on individual table records.

The indicators will be divided into five categories: domains, emails, hashes, IPs, and URLs. Each KV store contains the following columns: _key, misp_attribute_uuid, misp_timestamp, misp_type, and misp_value.

The solution I am going to demonstrate is easily scalable, allowing you to add another indicator category or incorporate additional columns as needed without much effort.

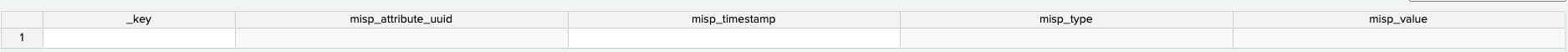

KV stores which will store the IOC data by category

The columns contained within the KV stores

Step 2: Create a filtering macro

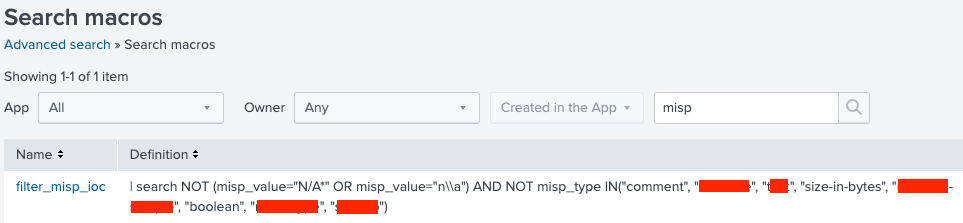

While this step is not mandatory, I highly recommend it as a best practice that makes your Splunk query more flexible and easier to read.

The filtering macro will be used to remove empty MISP values and MISP indicator types that we are currently not interested in.

Filtering macro example
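To make the filtering rule concrete outside of Splunk, here is a minimal Python sketch of the same logic: drop events with an empty misp_value and keep only indicator types we care about. The allow-list `WANTED_TYPES` and the sample events are assumptions for illustration; the actual macro is only shown in the screenshot and may differ.

```python
# Hypothetical allow-list of MISP indicator types to keep.
# The real macro's list is not reproduced in the text, so this is an assumption.
WANTED_TYPES = {
    "md5", "sha1", "sha256",
    "domain", "hostname",
    "ip-src", "ip-dst",
    "email-src", "url",
}


def keep_event(event: dict) -> bool:
    """Return True if the event has a non-empty misp_value and a wanted misp_type."""
    value = (event.get("misp_value") or "").strip()
    return bool(value) and event.get("misp_type") in WANTED_TYPES


# Illustrative events only, not real feed data.
events = [
    {"misp_type": "md5", "misp_value": "d41d8cd98f00b204e9800998ecf8427e"},
    {"misp_type": "md5", "misp_value": ""},                       # empty value: dropped
    {"misp_type": "comment", "misp_value": "seen in phishing"},   # unwanted type: dropped
    {"misp_type": "url", "misp_value": "http://example.com/a"},
]

filtered = [e for e in events if keep_event(e)]
```

Keeping the rule in one place, as the macro does, means a new indicator type only has to be added once.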

Step 3: Develop the Splunk query for dynamic lookup table updates

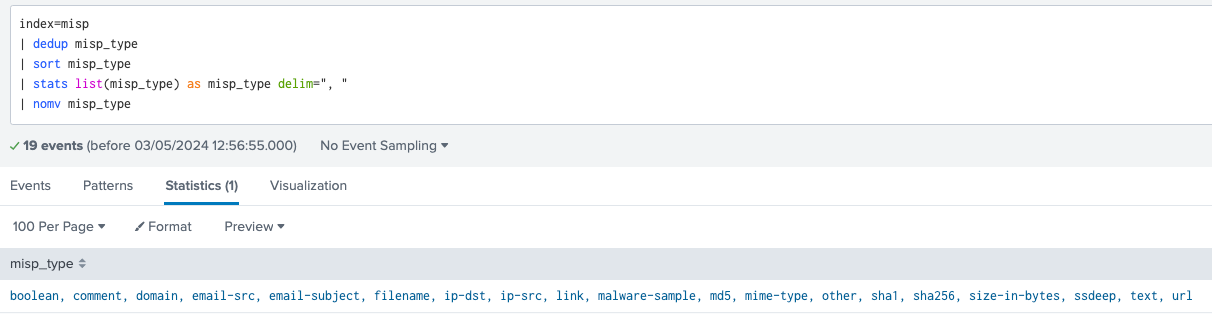

The last step is to craft the Splunk query that dynamically populates the lookup tables. Let’s first explore the MISP indicator types we have ingested in Splunk.

MISP indicator types we now have in Splunk

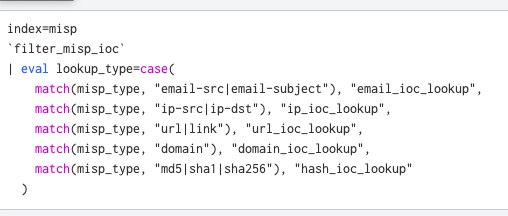

As you can see, multiple indicator types may belong to the same KV store. For example, md5, sha1, and sha256 all belong to the hash_ioc_lookup table. Therefore, we need to group related indicator types and map each group to its corresponding lookup table.

In my opinion, the most painless way to handle this specific problem would be to use the case() function in Splunk. However, I would be happy if you would like to share alternative solutions.

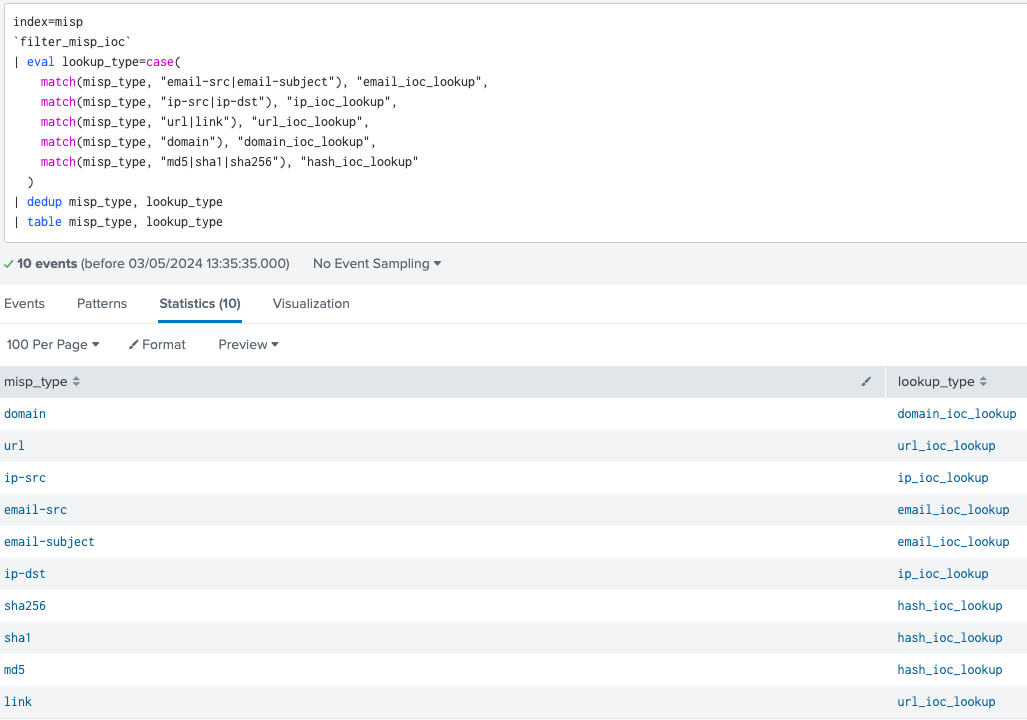

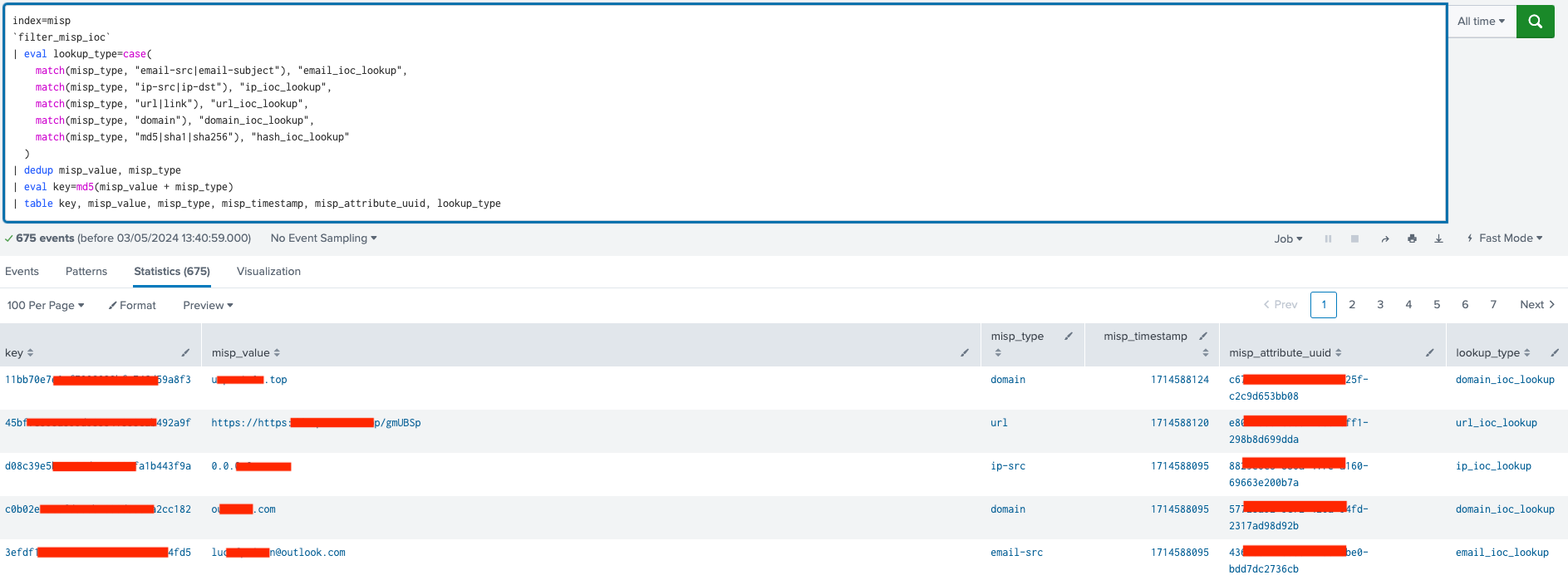

We first filter out empty or unwanted indicators and then we utilize the case() function.

Each MISP event is assigned a new lookup_type field containing the relevant lookup table name. The magic occurs thanks to the match() function, which takes a regular expression, and the regex alternation operator ‘|’, which lets a single pattern match multiple indicator types.

MISP indicator types and their corresponding lookup tables
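The mapping logic can be sketched in Python as an ordered list of regex rules, evaluated top to bottom the way case() evaluates its condition/value pairs. Only hash_ioc_lookup and the md5, sha1, and sha256 types come from the article; the other lookup names and type groupings below are assumptions for illustration.

```python
import re

# Ordered (pattern, lookup table name) pairs; the first match wins, like case().
# Only hash_ioc_lookup is named in the article; the other names are hypothetical.
LOOKUP_RULES = [
    (re.compile(r"^(md5|sha1|sha256)$"), "hash_ioc_lookup"),
    (re.compile(r"^(domain|hostname)$"), "domain_ioc_lookup"),
    (re.compile(r"^(ip-src|ip-dst)$"), "ip_ioc_lookup"),
    (re.compile(r"^(email-src|email-dst)$"), "email_ioc_lookup"),
    (re.compile(r"^(url|uri)$"), "url_ioc_lookup"),
]


def lookup_type_for(misp_type: str):
    """Return the lookup table name for a MISP type, or None if no rule matches."""
    for pattern, lookup_name in LOOKUP_RULES:
        # re.search mirrors an unanchored regex match, so the patterns are
        # anchored with ^ and $ to avoid matching a type name as a substring.
        if pattern.search(misp_type):
            return lookup_name
    return None  # comparable to case() yielding no value when nothing matches


assigned = {t: lookup_type_for(t) for t in ["md5", "sha256", "ip-dst", "comment"]}
```

Anchoring the patterns is a deliberate choice in this sketch: without it, a pattern for one type could also match a longer type name that merely contains it.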

The next step is to prepare the MISP data for storage in the lookup tables. We begin by removing any duplicate combinations of misp_value and misp_type. Then, we create an MD5-hashed key to serve as a unique reference (primary key) for each specific misp_value and misp_type combination. Because the same combination always produces the same key, writing it again later updates the existing record instead of adding a duplicate to the KV store.

IOC data ready to be stored in the KV stores
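As a rough illustration of this preparation step, the Python sketch below removes duplicate (misp_value, misp_type) pairs and derives a deterministic MD5 key for each remaining pair. Concatenating the value and the type in that order, with no separator, is an assumption; the article does not spell out how the two fields are combined before hashing.

```python
import hashlib


def make_key(misp_value: str, misp_type: str) -> str:
    """Derive a deterministic key from the value/type pair.

    Assumption: plain concatenation of value then type before hashing.
    """
    return hashlib.md5(f"{misp_value}{misp_type}".encode("utf-8")).hexdigest()


def dedup(events: list) -> list:
    """Keep the first event for each (misp_value, misp_type) combination."""
    seen = set()
    unique = []
    for event in events:
        pair = (event["misp_value"], event["misp_type"])
        if pair not in seen:
            seen.add(pair)
            unique.append(event)
    return unique


# Illustrative events only.
events = [
    {"misp_value": "198.51.100.7", "misp_type": "ip-src"},
    {"misp_value": "198.51.100.7", "misp_type": "ip-src"},  # exact duplicate
    {"misp_value": "198.51.100.7", "misp_type": "ip-dst"},  # same value, other type
]

prepared = [
    {**event, "key": make_key(event["misp_value"], event["misp_type"])}
    for event in dedup(events)
]
```

The same value under two different types yields two different keys, so both records can coexist, while a repeated pair always maps back to the same key.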

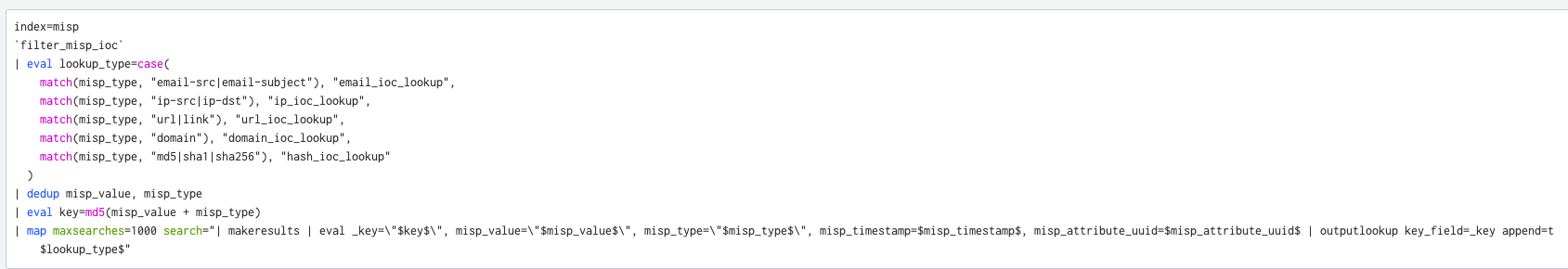

The final step is to iterate over the data and distribute the results to the corresponding lookup type. As a rule of thumb, the map command in Splunk may not offer the best performance, but its suitability depends on the specific use case. If it’s used for less resource-intensive operations like outputlookup, it could be the optimal choice.

The final Splunk query. Can be run manually or scheduled to run automatically as a saved search

The screenshot above depicts the final format of the Splunk query used for dynamically populating lookup tables. This search query uses the map command to run a secondary search once per result, configured to run up to 1,000 times. You should adjust the ‘maxsearches’ parameter based on the search frequency and the number of indicators being ingested in your environment.

The subsearch starts with the makeresults command, setting up an empty data set. The eval command then populates this data set, creating specific fields (_key, misp_value, misp_type, misp_timestamp, misp_attribute_uuid) and assigning values from each MISP event to these fields. The fields correspond to columns in the KV stores, organizing the data before it is sent to the lookup table.

Finally, we use the outputlookup command to write the results to the corresponding lookup table. The variable $lookup_type$ dynamically determines the name of the lookup table to which data will be written.

In the outputlookup command, we add the key_field=_key option to specify which field should act as the unique identifier in the KV store lookup table. This helps determine whether to update an existing record or add a new one. The append=t option ensures that new data is added to the existing table rather than replacing it, keeping all previously stored data.
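The routing and upsert behaviour described above can be modelled in a few lines of Python. This is only a simulation under stated assumptions, not Splunk code: each KV store is a dict keyed by _key, the `write_to_lookups` helper and the sample rows are hypothetical, and rows beyond `max_searches` are simply not processed in this sketch.

```python
from collections import defaultdict

# Columns written to every KV store, as listed in Step 1.
FIELDS = ("misp_value", "misp_type", "misp_timestamp", "misp_attribute_uuid")


def write_to_lookups(rows, stores, max_searches=1000):
    """Route each row to the store named by its lookup_type, upserting by _key."""
    for row in rows[:max_searches]:          # one "search" per row, capped
        record = {field: row[field] for field in FIELDS}
        record["_key"] = row["key"]
        # Same _key: the existing record is overwritten (update).
        # New _key: a record is added, and everything else is kept (append).
        stores[row["lookup_type"]][record["_key"]] = record
    return stores


# Illustrative rows only; keys are shortened placeholders.
rows = [
    {"key": "k1", "lookup_type": "hash_ioc_lookup",
     "misp_value": "d41d8cd98f00b204e9800998ecf8427e", "misp_type": "md5",
     "misp_timestamp": "1700000000", "misp_attribute_uuid": "uuid-1"},
    {"key": "k2", "lookup_type": "url_ioc_lookup",
     "misp_value": "http://example.com/a", "misp_type": "url",
     "misp_timestamp": "1700000100", "misp_attribute_uuid": "uuid-2"},
]

stores = defaultdict(dict)
write_to_lookups(rows, stores)
write_to_lookups(rows, stores)  # a second run updates in place, no duplicates
```

Running the write twice leaves one record per key in each store, which is the property that makes the query safe to schedule repeatedly.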

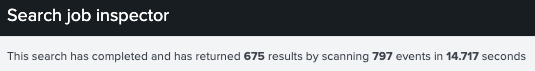

The map command ran over 675 events and took only 14.7 seconds

As we can see from the Search job inspector, we have reasonable search performance, even though we’re applying the map command to hundreds of events.

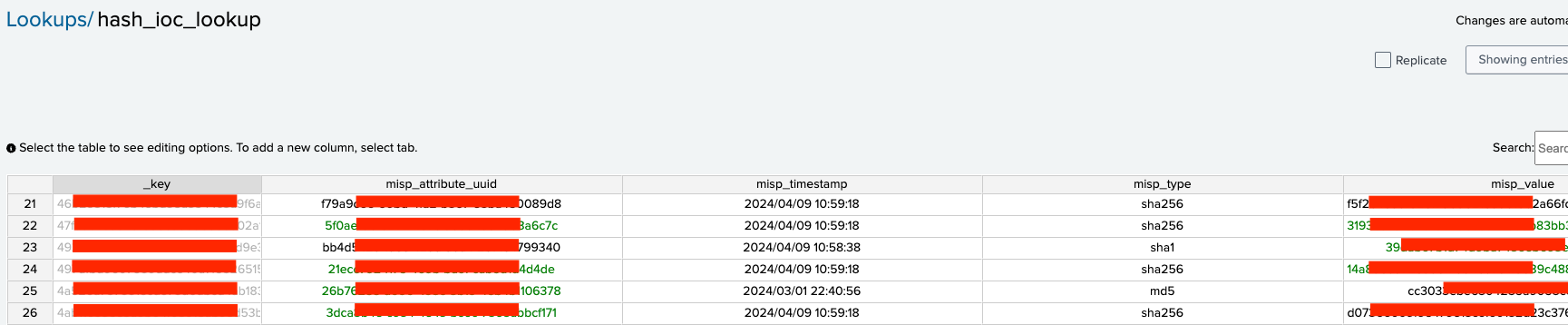

Example of IOC data dynamically stored in the hash_ioc_lookup by the Splunk query we developed

Final thoughts

In conclusion, the method presented in this article offers a powerful solution for dynamically populating Splunk’s IOC lookup tables.

By leveraging a single Splunk query, you can streamline the process of updating multiple lookup tables, enhancing workflow efficiency, and reducing the burden of manual updates or maintaining multiple lookup generation searches.

Depending on your needs, you can either run the query manually on demand or configure it as a saved search to run automatically at scheduled intervals.

With the flexibility to adjust parameters and scale the solution to accommodate your evolving needs, you can effectively manage and categorize Indicators of Compromise (IOCs) for improved threat detection and security operations.

Written by Aleksandar Matev